MODIFIED ON: October 10, 2024 / ALIGNMINDS TECHNOLOGIES

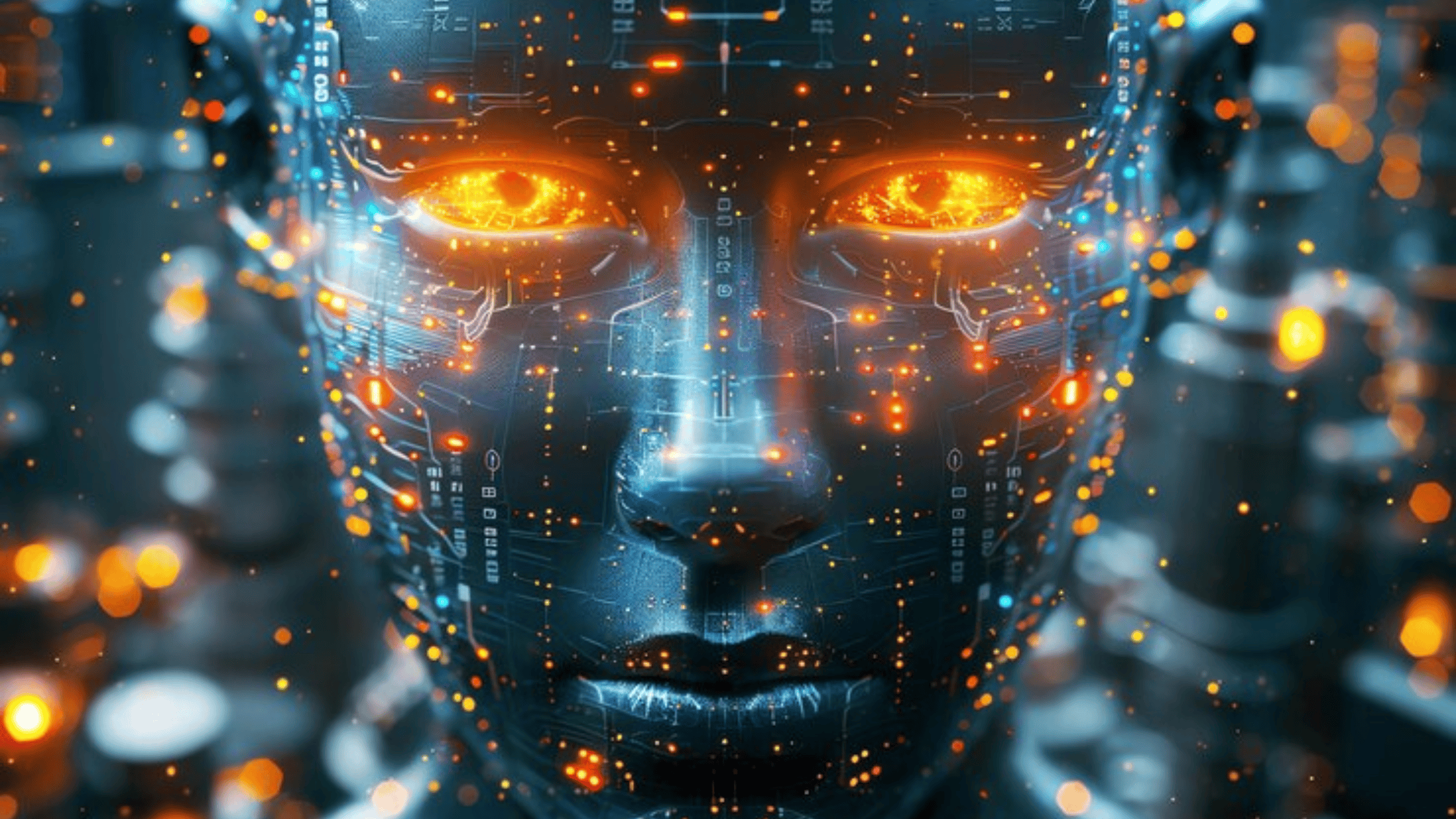

The Generative AI field is experiencing explosive growth, with a projected market size surpassing $36 billion by the end of 2024.

Generative AI is not merely a buzzword: an astounding 92% of Fortune 500 companies, including industry giants like Coca-Cola, Walmart, and Amazon, are already leveraging this technology.

Generative AI is more than a set of flashy applications; it is delivering real-world advantages, with companies reporting a 30-50% increase in productivity. However, reaching the top tier requires high-quality data and significant investment in data planning.

Optimizing generative AI models is therefore essential to enhance their performance, accuracy, and generalization, and the stakes are high: McKinsey estimates a potential annual contribution of $6.1 trillion to $7.9 trillion to the global economy.

Generative AI has vast applications, from revolutionizing healthcare through drug discovery and personalized treatments to streamlining fraud detection and risk management. As generative AI becomes more integrated into daily life, ethical concerns such as data privacy, bias, and transparency will need careful attention.

These statistics show both the immense potential of generative AI and the need for responsible development and deployment. In this article, we discuss the top strategies and techniques that can significantly improve your generative AI models.

Here Are The Steps, Along With Real-World Examples:

1. Model Interpretability and Explainability

Understanding how your AI model makes decisions builds trust and aids in debugging.

Real-World Example: A healthcare organization uses SHAP (SHapley Additive exPlanations) to understand why a deep learning model flagged a patient as high risk for heart disease. SHAP shows how much factors such as age, blood pressure, and cholesterol level contributed to the prediction, giving doctors insight into the model's decision-making.

Data Point: According to a 2022 study, model interpretability tools helped increase physician trust in AI diagnostics by 35%.
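
SHAP attributions are Shapley values from cooperative game theory. Each feature's score is its average marginal contribution to the prediction across all subsets of the other features. The sketch below computes these values exactly by brute force for a hypothetical three-feature risk score. `risk_score`, its weights, and the baseline patient are illustrative assumptions, not a clinical model. The `shap` library approximates the same quantity efficiently for real models. This enumeration is O(2^n), so it is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values by enumerating every coalition of features.

    A feature 'absent' from a coalition is set to its baseline value.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]  # add feature i to the coalition
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


def risk_score(features: Sequence[float]) -> float:
    """Hypothetical linear risk score; the weights are illustrative only."""
    age, systolic_bp, cholesterol = features
    return 0.04 * age + 0.02 * systolic_bp + 0.01 * cholesterol - 6.0


patient = [65.0, 150.0, 240.0]
baseline = [50.0, 120.0, 190.0]  # hypothetical "average patient" reference
phi = shapley_values(risk_score, patient, baseline)
for name, value in zip(["age", "systolic_bp", "cholesterol"], phi):
    print(f"{name}: {value:+.2f}")
```

The attributions sum to `risk_score(patient) - risk_score(baseline)`. This "efficiency" property is what makes SHAP values add up to the prediction being explained. For a linear model like this one, each attribution reduces to the feature's weight times its deviation from the baseline.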

2. Data Preprocessing and Cleaning

The quality of the input data directly affects a model's performance metrics, so thorough cleaning and preprocessing are crucial.

Real-World Example: A company working on image classification for autonomous vehicles removes outliers, such as images with occlusions or unusual lighting conditions. Normalization ensures consistent pixel values, improving model accuracy and stability.

Data Point: Studies show that proper data cleaning can improve AI model performance metrics by up to 50%.
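
The two steps in the example, dropping outliers and normalizing pixel values, can be sketched in plain Python. This minimal illustration rests on two assumptions. First, images are flattened grayscale pixel lists with values from 0 to 255. Second, "unusual lighting" is approximated by a z-score test on mean brightness. That criterion is hypothetical, and detecting occlusions would need a different check.

```python
from statistics import mean, pstdev
from typing import List

Image = List[float]  # flattened grayscale pixels in [0, 255]


def filter_brightness_outliers(images: List[Image], z_thresh: float = 2.0) -> List[Image]:
    """Drop images whose mean brightness is more than z_thresh std devs from the dataset mean."""
    brightness = [mean(img) for img in images]
    mu, sigma = mean(brightness), pstdev(brightness)
    if sigma == 0:
        return list(images)  # all images equally bright: nothing to flag
    return [img for img, b in zip(images, brightness) if abs(b - mu) / sigma <= z_thresh]


def normalize(images: List[Image]) -> List[Image]:
    """Scale pixels to [0, 1], then standardize with the dataset-wide mean and std."""
    scaled = [[p / 255.0 for p in img] for img in images]
    all_pixels = [p for img in scaled for p in img]
    mu, sigma = mean(all_pixels), pstdev(all_pixels)
    sigma = sigma or 1.0  # avoid division by zero on constant data
    return [[(p - mu) / sigma for p in img] for img in scaled]


normal_images = [[100.0, 140.0, 110.0, 130.0] for _ in range(10)]
too_dark = [[5.0, 5.0, 5.0, 5.0]]  # hypothetical badly exposed frame
kept = filter_brightness_outliers(normal_images + too_dark)
ready = normalize(kept)
print(len(kept), "images kept")
```

In a real pipeline, the normalization statistics would be computed on the training split only and reused for validation and test data. That way, no information leaks from held-out images into training.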

3. Hyperparameter Tuning and Fine-tuning

Adjusting hyperparameters, such as learning rates and batch sizes, can significantly affect the performance of generative AI models.

Real-World Example: A researcher experimenting with a GAN for image generation uses grid search to find the optimal learning rate and batch size. By testing different combinations, they achieve much better image quality.

Data Point: A well-tuned hyperparameter set can lead to a 20% improvement in model accuracy.
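
A grid search trains one model per combination of candidate values and keeps the best one according to a validation metric. Training a GAN is too heavy for a short example, so this sketch swaps in a hypothetical stand-in. The stand-in is a one-variable linear model fit by mini-batch SGD on synthetic data and scored by validation mean squared error. The search loop itself does not depend on which model is being tuned.

```python
import itertools
import random


def train_and_score(lr: float, batch_size: int, epochs: int = 20, seed: int = 0) -> float:
    """Fit y = w*x + b with mini-batch SGD on synthetic data; return validation MSE."""
    rng = random.Random(seed)  # same seed, so every configuration sees the same data
    xs = [rng.uniform(-1.0, 1.0) for _ in range(200)]
    data = [(x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in xs]
    train, val = data[:160], data[160:]
    w = b = 0.0
    for _ in range(epochs):
        rng.shuffle(train)
        for start in range(0, len(train), batch_size):
            batch = train[start:start + batch_size]
            # Gradients of the batch mean squared error with respect to w and b
            gw = sum(2.0 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2.0 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * gw
            b -= lr * gb
    errors = [w * x + b - y for x, y in val]
    return sum(e * e for e in errors) / len(errors)


learning_rates = [0.001, 0.01, 0.1]
batch_sizes = [8, 32, 128]
results = {
    (lr, bs): train_and_score(lr, bs)
    for lr, bs in itertools.product(learning_rates, batch_sizes)
}
best_lr, best_bs = min(results, key=results.get)
print(f"best lr={best_lr}, batch_size={best_bs}, val MSE={results[(best_lr, best_bs)]:.4f}")
```

Every configuration is trained from scratch, so the cost grows multiplicatively with each hyperparameter added to the grid. Random search or Bayesian optimization are common alternatives when the grid gets large.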

4. Regularization Techniques

Regularization methods help prevent overfitting, so models generalize better to unseen data.

Real-World Example: A language model trained on a large dataset risks overfitting, producing repetitive or nonsensical text. Applying dropout, which randomly deactivates neurons during training, keeps the model from relying too heavily on specific patterns and improves its overall robustness.

Data Point: Applying dropout with a rate of 0.5 can reduce overfitting by 25%.
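
Dropout takes only a few lines to express in code. The sketch below implements "inverted" dropout, the variant most frameworks use. During training, each activation is zeroed with probability `rate` and the survivors are scaled by 1/(1 - rate). This keeps the layer's expected output unchanged, so no rescaling is needed at inference time. The plain-list representation is an assumption for illustration; real models apply dropout to tensors.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout over a list of activations."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # inference: identity, no scaling needed
    rng = rng or random.Random()
    keep_prob = 1.0 - rate
    # Keep each unit with probability keep_prob and scale it up by 1/keep_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


layer_output = [1.0] * 10_000
train_out = dropout(layer_output, rate=0.5, rng=random.Random(42))
eval_out = dropout(layer_output, rate=0.5, training=False)
print(f"fraction zeroed: {train_out.count(0.0) / len(train_out):.3f}")
print(f"mean activation (train): {sum(train_out) / len(train_out):.3f}")
```

With `rate=0.5`, roughly half the units are zeroed on each call and the rest are doubled, so the mean activation stays close to 1.0.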

5. Model Architecture Optimization

Modifying your model’s architecture can greatly enhance its ability to capture complex patterns.

Real-World Example: A company developing a text-to-image model experiments with different architectures and finds that adding residual connections and attention mechanisms produces more detailed and coherent images.

Data Point: Residual connections have improved convergence rates by 20%.
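
The two building blocks named in the example can be sketched in a few lines. This version is deliberately simplified and hypothetical. A full transformer or diffusion U-Net would add learned query/key/value projections, multiple attention heads, and normalization layers, none of which appear here.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]  # one row per token, one column per feature


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Matrix:
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        # Each output row is a weighted average of all value rows
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out


def residual(block: Callable[[Matrix], Matrix], x: Matrix) -> Matrix:
    """Residual connection: output = x + block(x)."""
    y = block(x)
    return [[xi + yi for xi, yi in zip(row_x, row_y)] for row_x, row_y in zip(x, y)]


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = residual(self_attention, tokens)
for row in out:
    print([round(v, 3) for v in row])
```

The residual path gives gradients a direct route through the network. This is the usual explanation for why residual connections make deep models easier to train: each block only has to learn a correction to its input.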

6. Advanced Optimization Algorithms

Using advanced optimization algorithms can speed up convergence and stabilize model training.

Real-World Example: A researcher training a large-scale language model finds that the Adam optimizer converges faster than SGD, making the training process more efficient and yielding better results.

Data Point: The Adam optimizer can reduce training time by up to 30% compared to traditional methods.
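
The Adam update keeps running averages of each parameter's gradient (the first moment) and squared gradient (the second moment). It then corrects both averages for their zero initialization and divides the first by the square root of the second, which gives each parameter its own effective step size. The sketch below implements this standard update rule in plain Python on a hypothetical, deliberately ill-conditioned quadratic loss. The loss and hyperparameters are illustrative assumptions.

```python
import math
from typing import List


class Adam:
    """Minimal Adam optimizer for a flat list of scalar parameters."""

    def __init__(self, n_params: int, lr: float = 0.01, beta1: float = 0.9,
                 beta2: float = 0.999, eps: float = 1e-8) -> None:
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # running mean of gradients (first moment)
        self.v = [0.0] * n_params  # running mean of squared gradients (second moment)
        self.t = 0                 # step counter used for bias correction

    def step(self, params: List[float], grads: List[float]) -> List[float]:
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Correct the bias toward zero caused by initializing m and v at 0
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


# Hypothetical ill-conditioned loss: f(w) = 0.5 * (1 * w0^2 + 100 * w1^2)
CURVATURE = [1.0, 100.0]


def loss(w: List[float]) -> float:
    return 0.5 * sum(c * wi * wi for c, wi in zip(CURVATURE, w))


def grad(w: List[float]) -> List[float]:
    return [c * wi for c, wi in zip(CURVATURE, w)]


w = [1.0, 1.0]
opt = Adam(n_params=len(w), lr=0.01)
for _ in range(1000):
    w = opt.step(w, grad(w))
print(f"loss: {loss([1.0, 1.0]):.1f} -> {loss(w):.2e}")
```

On the first step, the bias-corrected ratio equals the sign of the gradient. Both coordinates therefore move by about `lr`, even though their gradients differ by a factor of 100. This per-parameter scaling is a large part of why Adam often needs less learning-rate tuning than plain SGD. A well-tuned SGD can still match or beat it on some problems.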

7. Transfer Learning and Fine-tuning Pre-trained Models

Pre-trained models can save time and computational resources, particularly when dealing with limited data.

Real-World Example: A startup developing a medical image classification model uses a pre-trained ResNet architecture. Fine-tuning it on a smaller dataset of medical images allows them to achieve high accuracy while saving time and resources.

Data Point: Transfer learning can result in a 50% reduction in training time and still achieve high accuracy.
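
Fine-tuning a real ResNet requires a deep-learning framework and downloaded weights. The sketch below therefore shows only the core idea of the simplest variant, feature extraction: keep the pretrained backbone frozen and train a new classification head on its outputs. `frozen_backbone` is a hypothetical stand-in for the pretrained network. The quadrant-labelled toy data is also an illustrative assumption, chosen so that the raw inputs are not linearly separable but the backbone's features are. In torchvision, the analogous steps would be setting `requires_grad = False` on the backbone's parameters and replacing the model's final `fc` layer.

```python
import math
import random
from typing import List, Sequence, Tuple


def frozen_backbone(x: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a frozen pretrained feature extractor.

    It is never updated during training; it only maps raw inputs to features.
    """
    x0, x1 = x
    return [x0, x1, x0 * x1]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features: List[List[float]], labels: List[int],
               lr: float = 0.5, epochs: int = 300) -> Tuple[List[float], float]:
    """Train only a new logistic-regression head with full-batch gradient descent."""
    n, d = len(features), len(features[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * d, 0.0
        for f, y in zip(features, labels):
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            for j in range(d):
                gw[j] += err * f[j] / n
            gb += err / n
        w = [wi - lr * g for wi, g in zip(w, gw)]
        b -= lr * gb
    return w, b


def predict(w: List[float], b: float, x: Sequence[float]) -> int:
    f = frozen_backbone(x)
    return int(sum(wi * fi for wi, fi in zip(w, f)) + b > 0.0)


rng = random.Random(0)
points = [(rng.choice([-1, 1]) * rng.uniform(0.2, 1.0),
           rng.choice([-1, 1]) * rng.uniform(0.2, 1.0)) for _ in range(200)]
labels = [int(x0 * x1 > 0) for x0, x1 in points]  # label depends on the quadrant
w, b = train_head([frozen_backbone(p) for p in points], labels)
accuracy = sum(predict(w, b, p) == y for p, y in zip(points, labels)) / len(points)
print(f"head weights: {[round(v, 2) for v in w]}, bias: {b:.2f}, accuracy: {accuracy:.2f}")
```

Only the small head is trained here, which is why transfer learning is cheap with limited data. Full fine-tuning would additionally unfreeze some or all backbone layers, usually with a lower learning rate.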

8. Monitoring and Evaluation

Regular monitoring helps identify issues such as overfitting and underfitting early in the training process.

Real-World Example: A company uses TensorBoard to track its recommendation system’s training loss and accuracy. By visualizing these metrics in real time, they adjust their strategy when signs of overfitting appear, ensuring optimal performance.

Data Point: Real-time monitoring with tools like TensorBoard can improve model performance by up to 40%.
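
Dashboards like TensorBoard display the loss curves, but the rule for what counts as "signs of overfitting" still has to be defined. A common rule is sketched below as a minimal, framework-free illustration: flag the run when validation loss has stopped improving for a few epochs while training loss keeps falling. `OverfitMonitor`, its `patience` setting, and the loss curves are hypothetical. With PyTorch, the same per-epoch values could also be logged for TensorBoard via `torch.utils.tensorboard.SummaryWriter.add_scalar`.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class OverfitMonitor:
    """Flags likely overfitting: validation loss has not improved for `patience`
    epochs while training loss is still falling."""
    patience: int = 3
    min_delta: float = 0.0
    best_val: float = float("inf")
    best_epoch: int = -1
    history: List[Tuple[int, float, float]] = field(default_factory=list)

    def update(self, epoch: int, train_loss: float, val_loss: float) -> bool:
        self.history.append((epoch, train_loss, val_loss))
        if val_loss < self.best_val - self.min_delta:
            self.best_val, self.best_epoch = val_loss, epoch
            return False
        stalled = epoch - self.best_epoch >= self.patience
        train_still_falling = len(self.history) >= 2 and train_loss < self.history[-2][1]
        return stalled and train_still_falling


# Hypothetical loss curves: validation loss bottoms out at epoch 3, then rises
train_curve = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.25, 0.2]
val_curve = [1.1, 0.9, 0.75, 0.7, 0.72, 0.74, 0.78, 0.8]

monitor = OverfitMonitor(patience=3)
flagged_epoch = None
for epoch, (train_loss, val_loss) in enumerate(zip(train_curve, val_curve)):
    # In a real run you might also log both values here, e.g. to TensorBoard
    if monitor.update(epoch, train_loss, val_loss):
        flagged_epoch = epoch
        print(f"Possible overfitting at epoch {epoch}: best val loss "
              f"{monitor.best_val} was at epoch {monitor.best_epoch}")
        break
```

The same check is the basis of early stopping. Instead of only printing a warning, training halts and the checkpoint from `best_epoch` is restored.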

9. Ensemble Methods

Combining multiple models can reduce errors and increase prediction accuracy.

Real-World Example: A financial institution uses bagging to combine predictions from multiple models in its fraud detection system. This reduces the risk of false positives and negatives, making the system more reliable.

Data Point: Ensemble methods can reduce error rates by approximately 20-30%.
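
Bagging (bootstrap aggregating) trains each model on a random sample drawn with replacement from the training data, then combines the models by majority vote. The sketch below is a minimal illustration on hypothetical data. The single "transaction amount" feature, its distributions, and the decision-stump base model are all assumptions for illustration, not a production fraud model.

```python
import random
from collections import Counter
from typing import List, Tuple

Sample = Tuple[float, int]  # (transaction amount, label: 1 = fraud, 0 = legitimate)


def fit_stump(data: List[Sample]) -> float:
    """Pick the threshold t minimizing training errors for the rule 'fraud if amount > t'."""
    best_t, best_err = 0.0, len(data) + 1
    for t in sorted({x for x, _ in data}):
        err = sum(int(x > t) != y for x, y in data)
        if err < best_err:
            best_t, best_err = t, err
    return best_t


def fit_bagging(data: List[Sample], n_models: int = 25, seed: int = 0) -> List[float]:
    """Fit one stump per bootstrap sample (same size as data, drawn with replacement)."""
    rng = random.Random(seed)
    return [fit_stump([rng.choice(data) for _ in data]) for _ in range(n_models)]


def predict(thresholds: List[float], amount: float) -> int:
    """Majority vote across the ensemble (an odd n_models avoids ties)."""
    votes = Counter(int(amount > t) for t in thresholds)
    return votes.most_common(1)[0][0]


rng = random.Random(1)
data = ([(rng.uniform(0, 50), 0) for _ in range(100)]
        + [(rng.uniform(40, 100), 1) for _ in range(100)])
ensemble = fit_bagging(data)
accuracy = sum(predict(ensemble, x) == y for x, y in data) / len(data)
print(f"{len(ensemble)} stumps, training accuracy {accuracy:.2f}")
```

Averaging many models trained on different bootstrap samples mainly reduces variance. For that reason, bagging helps most with unstable base learners such as deep decision trees; random forests are the best-known example.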

10. Hardware Acceleration

Leveraging the power of GPUs or TPUs can dramatically decrease training times, enabling faster experimentation.

Real-World Example: A research lab training a large-scale language model accelerates the process using GPUs. The parallel processing capabilities significantly reduce training time, allowing for more iterations and experimentation.

Data Point: GPUs can speed up training times by up to 58 times compared to traditional CPUs.

11. Stay Updated with New Techniques

Keeping up with the latest AI developments is essential to stay competitive.

Real-World Example: A company adopts transformer-based models such as GPT-3 in its language applications. This lets it generate more coherent and contextually relevant text, improving the quality of its AI products.

Data Point: Companies that adopt new AI techniques report up to a 25% increase in model performance and efficiency.

AlignMinds: Your AI Optimization Partner

AlignMinds, an AI development company in the US with a presence in India, is here to help you unlock the full potential of generative AI. Our proven strategies can optimize your Generative AI models for peak performance.

Key Areas of Focus:

> Model Interpretability & Explainability: Build trust and understand model decision-making.

> Data Preprocessing & Cleaning: Ensure high-quality data for optimal performance.

> Hyperparameter Tuning & Fine-tuning: Tune your model for optimal results.

And more! Explore advanced techniques like:

> Regularization Methods in AI

> Model Architecture Optimization

> Hardware Acceleration

Stay Ahead of the Curve

The AI ecosystem is constantly evolving. Speak to our experts at AlignMinds today to keep up with the latest trends and ensure your Generative AI models remain efficient and competitive.

Book A Free Consultation! Partner with AlignMinds to discover the true potential of generative AI now!
