MODIFIED ON: November 29, 2022 / ALIGNMINDS TECHNOLOGIES / 0 COMMENTS

The last decade saw an explosion in the tech industry with the introduction of technologies like wearable computers, ultra-private devices, DevOps, software-defined data centres, Big Data, smart mobiles, cloud computing, etc. Of these, Big Data is becoming the next big thing in the IT world.

Some things are so big that we can’t avoid them, even if we want to. “Big Data” is one of those things.

Big Data is not just a group of data; it is different types of data handled in new ways. It is a collection of data so vast and complex that it becomes very tedious to capture, store, process, retrieve and analyze with on-hand database management tools or traditional data processing techniques.

Giant companies like Amazon and Wal-Mart, as well as organizations such as the U.S. government and NASA, are using Big Data to meet their business goals. Big Data can also play a role for small or medium-sized companies and organizations that recognize the opportunity to capitalize on its gains.

Why Big Data?

The demand for Big Data is driven by:

- Increase of storage capacity

- Increase of processing power

- Availability of data (of different data types)

The three V’s in Big Data

The three V’s, “volume, velocity and variety”, are concepts introduced by Doug Laney in 2001 to describe the challenges of data management. Big Data is high-volume, high-velocity and high-variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision making.

3 V’s of BIG DATA

1. Volume

Volume refers to the vast amount of data generated every second: far more than can easily be handled by a single database, computer or spreadsheet.

2. Velocity

Velocity refers to the speed at which new data is generated and the speed at which data moves around.

3. Variety

Variety refers to the different kinds of information found in each record. Such data often lacks an inherent structure and has no predictable size, rate of arrival, transformation, or analysis when processed.

Additionally, some organizations add a fourth V, “Veracity”, to describe Big Data.

4. Veracity

Veracity refers to the reliability and trustworthiness of the data. The quality of the data being captured can vary greatly, and the accuracy of any analysis depends on the veracity of the source data.
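To make veracity a little more concrete, here is a minimal sketch that scores a batch of records by the share that pass basic validation. The `Reading` type, the field names and the plausible temperature range are all assumptions made for illustration; a real pipeline would define its own rules.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Reading:
    """Hypothetical sensor record, used only for this illustration."""
    sensor_id: str
    temperature_c: Optional[float]


def is_valid(reading: Reading) -> bool:
    """A reading counts as valid if it has an ID and a plausible temperature."""
    if not reading.sensor_id:
        return False
    if reading.temperature_c is None:
        return False
    # Assumed plausible range for an outdoor sensor, in degrees Celsius.
    return -90.0 <= reading.temperature_c <= 60.0


def veracity_score(readings: List[Reading]) -> float:
    """Return the fraction of readings that pass validation (0.0 for no data)."""
    if not readings:
        return 0.0
    return sum(is_valid(r) for r in readings) / len(readings)


sample = [
    Reading("a1", 21.5),   # valid
    Reading("a2", None),   # missing value
    Reading("", 19.0),     # missing ID
    Reading("a3", 999.0),  # implausible value
]
print(veracity_score(sample))  # prints 0.25
```

A low score like this one suggests the source needs cleaning before any analysis built on it can be trusted.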

Big Data Analysis: Some recent technologies

Companies rely on the following technologies to do Big Data analysis:

- Fast and efficient processors

- Modern storage and processing technologies, especially for unstructured data

- Robust server processing capacities

- Cloud computing

- Clustering, high connectivity, parallel processing, massively parallel processing (MPP)

- Apache Hadoop
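The parallel-processing idea behind tools such as Hadoop is often described as map, shuffle and reduce. The sketch below imitates that pattern with a word count inside a single Python process. It is an illustration only, not Hadoop's API: the function names are made up for this example, and the thread pool merely stands in for the cluster nodes that would each process one chunk.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple


def map_phase(chunk: str) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(mapped: List[List[Tuple[str, int]]]) -> Dict[str, List[int]]:
    """Group all emitted values by their key (the word)."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Sum the grouped values to get one count per word."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(chunks: List[str]) -> Dict[str, int]:
    # Each chunk is mapped independently, which is what lets a real
    # cluster spread the work across many machines.
    with ThreadPoolExecutor() as pool:
        mapped = list(pool.map(map_phase, chunks))
    return reduce_phase(shuffle_phase(mapped))


chunks = ["big data is big", "data moves fast", "Big Data"]
print(word_count(chunks))
```

Because the map step never looks outside its own chunk, the same logic scales from three strings to many files spread over many servers.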

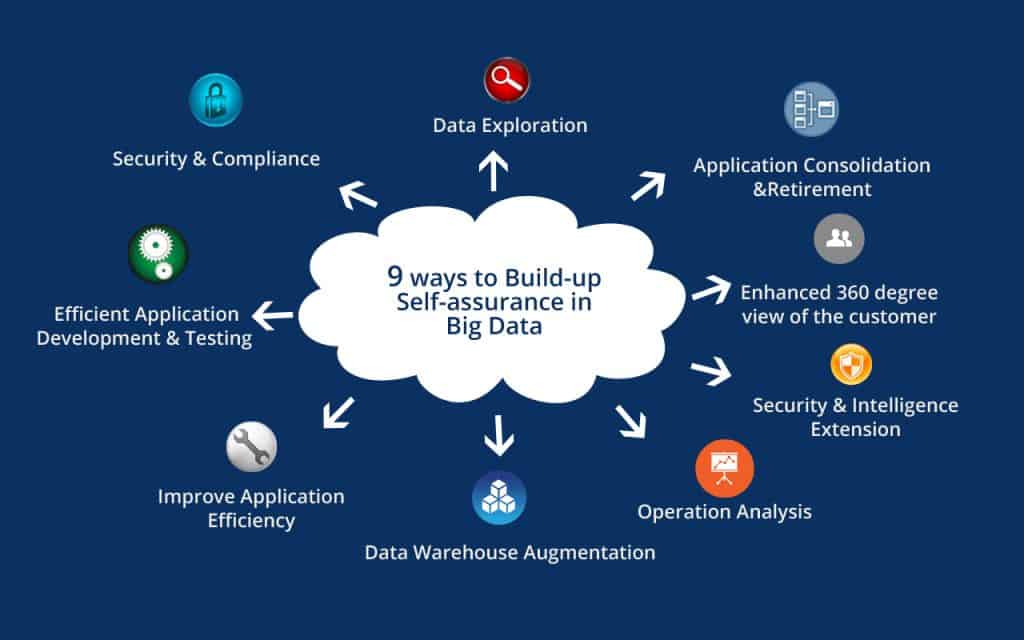

9 ways to build confidence in Big Data

The following processes help build confidence in Big Data:

1. Data Exploration

Big Data exploration lets you discover and mine Big Data, so that you can find, visualize and understand it to improve decision making.

2. Application Consolidation and Retirement

Archive old application data and streamline new application deployments with test data management, integration and data quality.

3. Enhanced 360-degree view of the customer

It equips customer-facing professionals with improved and accurate information to engage customers, develop trusted relationships and improve loyalty. To gain that 360-degree view of the customer, the organization needs to leverage internal and external sources of structured and unstructured data.

4. Security and Intelligence Extension

The increasing number of crimes, such as cyber-based terrorism and computer intrusions, poses a real threat to every individual and organization. To meet these security challenges, businesses need to enhance security platforms with Big Data technologies to process and analyze new data types and underleveraged data sources.

5. Operations Analysis

Operations analysis examines a variety of machine data to improve business results.

6. Data Warehouse Augmentation

Data Warehouse Modernization (formerly known as Data Warehouse Augmentation) is about building on an existing data warehouse infrastructure, leveraging Big Data technologies to ‘augment’ its capabilities. It integrates Big Data and data warehouse capabilities to increase operational efficiency.

7. Improve Application Efficiency

Manage data growth, improve performance, and lower the cost for mission-critical applications.

8. Efficient Application Development and Testing

It creates and maintains right-sized development, test and training environments.

9. Security and Compliance

It protects data, improves data integrity, moderates exposure risks and lowers compliance costs.

If you set up a system that works through all these stages, then congratulations!

You’re in Big Data.

And hopefully, ready to start reaping the benefits!
